ModelRed

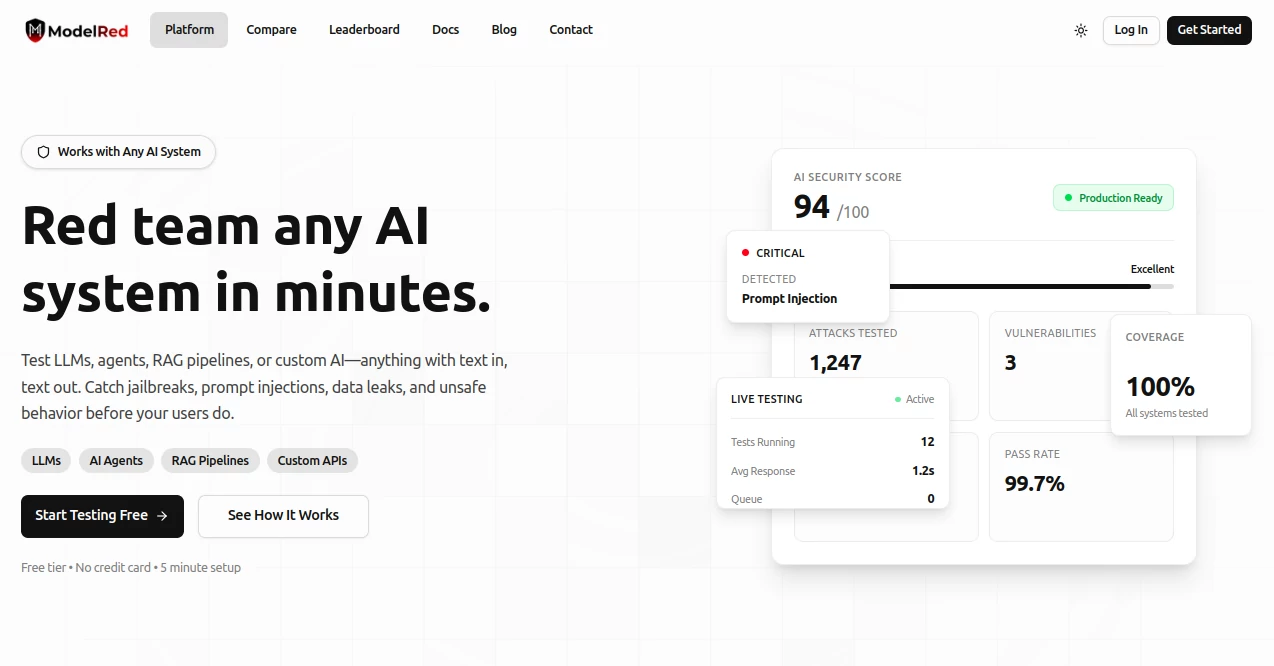

Red Team Any AI in Minutes

What is ModelRed?

ModelRed hands developers a battle-tested shield for every text-based AI, from chatbots to multi-agent pipelines. Drop in your endpoint, unleash thousands of real-world attacks, and walk away with a clear security score before a single user sees the product. Teams shipping AI swear it catches the sneaky bugs that slip past homemade checks.

Introduction

A handful of engineers got tired of watching jailbreaks wreck launches, so they built ModelRed to automate the grunt work of red-teaming. In under a year it’s grown from a weekend script to the daily driver for over five hundred squads, including startups racing to MVP and enterprises locking down production. What started as a simple “plug-and-poke” tool now ships evolving attack libraries that stay one step ahead of the latest tricks, giving builders the same firepower once reserved for red-team consultancies—minus the six-figure invoice.

Key Features

User Interface

Land on a single page: paste your API base URL, pick a preset or roll your own, and hit Run. Live logs stream verdicts in plain English—green for safe, red for leaked secrets—while a sidebar graphs your score against the leaderboard. Nothing to install; the browser tab is your cockpit.

Accuracy & Performance

More than ten thousand attack vectors, refreshed weekly, hit every corner: single-turn jailbreaks, slow-burn manipulations, tool-abuse loops. Most runs finish in under four minutes, even on beefy multi-agent chains. False positives reportedly hover below one percent, and every failure ships with the exact prompt that broke the model, so each finding can be reproduced and checked.

Capabilities

Point it at OpenAI, Anthropic, your local Ollama box, or a custom FastAPI wrapper: the workflow is the same. Compare versions side-by-side, gate CI/CD on a passing score, export JSON for auditors, or loop findings straight into LangSmith traces. Spanish, French, and code-switching attacks roll out next quarter.

Security & Privacy

Your prompts never touch public logs; everything stays in your workspace behind SOC-2-grade walls. API keys are encrypted at rest, audit trails name every user who triggered a run, and you can nuke a project with one click. No training data, no telemetry, no surprises.

Use Cases

Solo founders paste their MVP endpoint on day one and ship with a 94/100 badge. Security leads bake the gate into GitHub Actions so nothing merges below 85. Compliance crews export stamped reports for FedRAMP reviews. Prompt engineers A/B test guardrails live, watching the score climb with every new rule.
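
The merge gate described above can be sketched as a small check that a CI job runs after a scan. This is a minimal sketch, not ModelRed's actual integration: the report shape (a JSON object with a numeric `score` field), the `report.json` file name, and the `gate` and `main` helpers are all assumptions made for illustration. Only the threshold of 85 comes from the text.

```python
import json

THRESHOLD = 85  # from the text: nothing merges below 85


def gate(report: dict, threshold: int = THRESHOLD) -> int:
    """Return a process exit code: 0 if the score passes, 1 otherwise.

    Assumes a hypothetical report shape: {"score": <number from 0 to 100>}.
    """
    score = report.get("score")
    if isinstance(score, bool) or not isinstance(score, (int, float)):
        # A missing or malformed score fails closed.
        return 1
    return 0 if score >= threshold else 1


def main(path: str = "report.json") -> int:
    """Load an exported report (hypothetical file name) and apply the gate."""
    with open(path, encoding="utf-8") as fh:
        return gate(json.load(fh))
```

A CI step would call `sys.exit(main(path))`, so a non-zero exit code blocks the merge. Failing closed on a missing score is a design choice: a broken export should not pass silently.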

Pros and Cons

Pros:

- Five-minute setup, zero SDK wrestling.

- Leaderboard shames weak models into better ones.

- Free tier never expires for dev work.

- Exportable proof that beats “trust me, bro” audits.

Cons:

- Vision and audio models aren’t covered yet; they wait for the next module.

- Heavy concurrent runs queue behind paid plans.

- New attack types land weekly—stay on top of release notes.

Pricing Plans

Free forever for local and dev endpoints, 500 attacks monthly. Pro jumps to twenty-nine bucks for unlimited runs, private workspaces, and CI/CD hooks. Teams at ninety-nine add SSO, custom retention, and priority queue. All paid plans include a fourteen-day taste of the full stack, cancel any time.

How to Use ModelRed

Sign up with email, grab your API key, paste your endpoint URL, choose “Quick Scan” or “Deep Dive,” and watch the dashboard light up. Tweak the attack mix, rerun on a fresh model version, then download the PDF badge for your README. Hook the CLI into pre-commit for nightly peace of mind.

Comparison with Similar Tools

Open-source scanners need days of YAML wrangling; ModelRed fires in seconds. Enterprise suites lock you to one cloud vendor—this one hugs every provider and your laptop. Where others stop at jailbreak bingo, ModelRed scores the full OWASP AI Top-10 and hands you the fix recipe.

Conclusion

ModelRed turns AI security from a luxury line-item into a launch-day checkbox. Ship faster, sleep deeper, and let the attackers bounce off a wall you hardened over a lunch break. As models grow wilder, this platform keeps the reins in the builders’ hands, one red run at a time.

Frequently Asked Questions (FAQ)

Does it work with my local Llama?

Yes—spin up Ollama, grab the OpenAI-compatible URL, and test away.
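
For reference, Ollama serves its OpenAI-compatible API under `/v1` on its default local port, 11434. The sketch below builds the kind of chat request such an endpoint accepts, using only the Python standard library. The model name `llama3` and the helper names are assumptions; substitute whatever model you have pulled locally.

```python
import json
import urllib.request

# Ollama's default local address; /v1 is its OpenAI-compatible API root.
OLLAMA_BASE_URL = "http://localhost:11434/v1"


def build_chat_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,  # assumption: this model has been pulled locally
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{OLLAMA_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

With Ollama running, `send(build_chat_request("Hello"))` returns the model's reply, which is a quick way to confirm the URL works before pasting it into a scanner.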

What counts as an “attack”?

One prompt sent to your model; bundles of 50+ run in parallel.
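
To make the counting concrete, here is a rough sketch of a bundle of 50 prompts sent in parallel. It does not show how ModelRed schedules work internally: `run_bundle` and `echo_model` are hypothetical names, and the stand-in model simply echoes its input so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_bundle(
    prompts: List[str],
    send_prompt: Callable[[str], str],
    max_workers: int = 8,
) -> List[str]:
    """Send every prompt in the bundle concurrently; replies keep prompt order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in input order, regardless of finish order.
        return list(pool.map(send_prompt, prompts))


def echo_model(prompt: str) -> str:
    """Stand-in for a real endpoint call, so the sketch runs offline."""
    return f"reply to: {prompt}"


bundle = [f"attack prompt {i}" for i in range(50)]  # one bundle of 50 attacks
replies = run_bundle(bundle, echo_model)
print(len(replies))  # 50: each prompt counts as one attack
```

By this count, a single 50-prompt bundle uses 50 of the free tier's 500 monthly attacks.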

Can I add my own attacks?

Pro and up—upload YAML, version-control in Git, done.

Is my data used to train anything?

Never. Full stop.

How fresh are the attack vectors?

Curated weekly from public jailbreak repos and our bug-bounty feed.

AI Testing & QA, AI API Design, AI Developer Tools.

These classifications represent its core capabilities and areas of application. For related tools, explore the linked categories above.

ModelRed details

This tool is no longer available on submitaitools.org; find alternatives on Alternative to ModelRed.

Pricing

- Free

Apps

- Web Tools