🧠 AI Quiz

Think you really understand Artificial Intelligence?

Test yourself and see how well you know the world of AI.

Answer AI-related questions, compete with other users, and prove that

you’re among the best when it comes to AI knowledge.

Reach the top of our leaderboard.

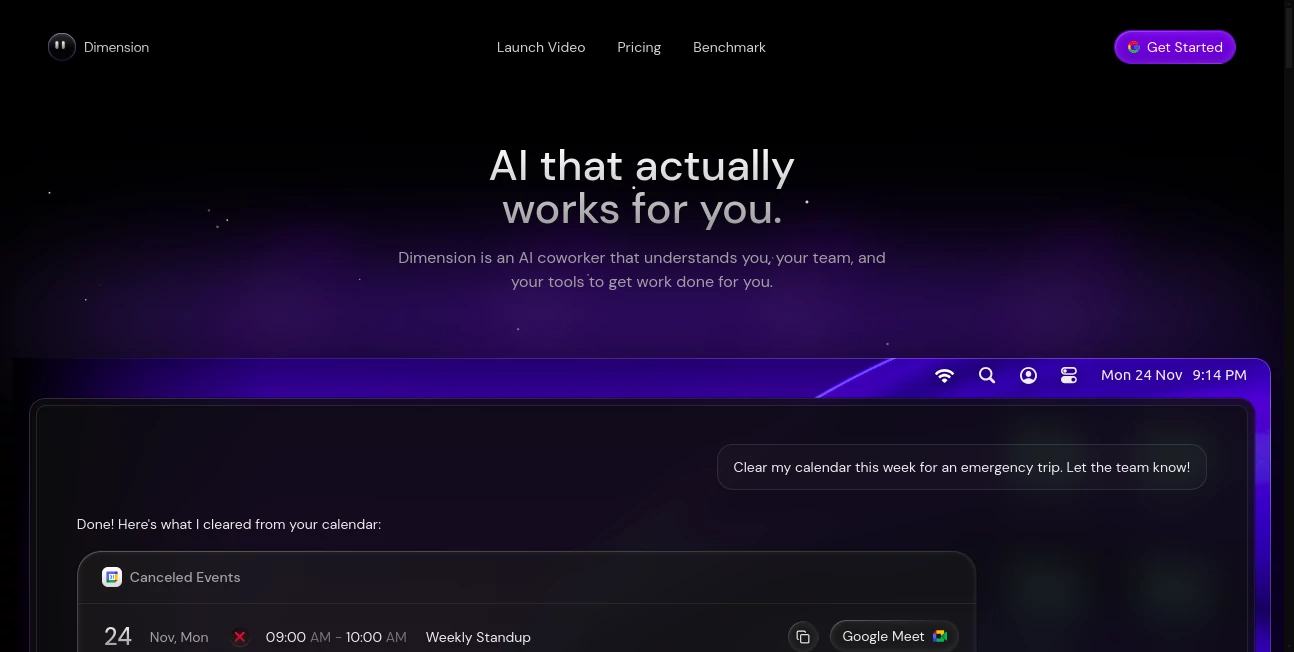

Dimension

What is Dimension?

Dimension steps in as a sharp companion for developers wrestling with the quirks of machine learning setups, offering a streamlined way to evaluate and tweak models before they hit production. This platform turns the foggy guesswork of testing into clear, data-backed decisions, helping teams spot weaknesses and strengths with ease. Many who've integrated it into their workflows report that it shaved weeks off debugging cycles, turning potential pitfalls into polished performers that run smoothly under real-world loads.

Introduction

Dimension emerged from the trenches of AI development, where a group of engineers grew tired of piecing together scattered benchmarks and brittle scripts that crumbled under scale. They launched it a few years back as a unified spot to measure model health, and it quickly drew in startups and labs chasing reliable results without the ritual of reinvention. What began as a tool for internal tweaks has blossomed into a go-to for those fine-tuning everything from chat responses to image classifiers, with users swapping stories of breakthroughs that came from its unvarnished insights. At its heart, it's about bridging the gap between lab promise and live reliability, making sure your models don't just work in theory but thrive in the thick of things.

Key Features

User Interface

The dashboard unfolds like a well-thumbed logbook, with drag-and-drop zones for your datasets and a central pane that lights up as you load models for comparison. Tabs for metrics and reports slide in without fanfare, and the visual charts adjust on the fly to your tweaks, keeping things intuitive even when juggling multiple runs. It's the kind of setup that feels steady after a single spin, rewarding quick dives without burying you in menus or misleading markers.

Accuracy & Performance

It cuts through the noise with benchmarks that hold true across hardware shifts, delivering scores that mirror real deployments down to the decimal. Runs wrap efficiently, scaling from toy tests to full fleets without choking, and the breakdowns flag outliers with precision that saves hours of head-scratching. Teams often highlight how it uncovers subtle drifts that sneak past manual checks, ensuring your evals stay sharp as the models evolve.

Capabilities

From slicing through custom metrics to tracing biases in outputs, it arms you with tools that go beyond basic accuracy, like robustness probes and drift detectors that keep watch over time. You can chain evals into pipelines for automated gates, or export findings to dashboards that sync with your stack, handling everything from text classifiers to vision tasks. The depth shines in collaborative modes, where shared runs let squads align on what matters without email chains.
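To make the idea of an automated gate concrete, here is a minimal sketch in plain Python. It does not use Dimension's API, which this page does not document. The `release_gate` function, the `GateResult` type, the metric names, and the `tolerance` default are all hypothetical, and the sketch assumes every metric is higher-is-better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateResult:
    """Outcome of a gate check (hypothetical type, for illustration only)."""
    passed: bool
    regressions: dict  # metric name -> (baseline value, candidate value)


def release_gate(baseline: dict, candidate: dict, tolerance: float = 0.01) -> GateResult:
    """Compare candidate metrics against a baseline run.

    Assumes higher is better for every metric. A metric counts as a
    regression if it is missing from the candidate or falls more than
    `tolerance` below its baseline value.
    """
    regressions = {}
    for name, base_value in baseline.items():
        cand_value = candidate.get(name)
        if cand_value is None or cand_value < base_value - tolerance:
            regressions[name] = (base_value, cand_value)
    return GateResult(passed=not regressions, regressions=regressions)
```

In a pipeline, a step like this would typically run after the evals finish and block the release when `passed` is false, with `regressions` naming the metrics that slipped.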

Security & Privacy

Your datasets and model weights get wrapped in tight controls, with options to run evals in isolated pockets that wipe clean after the job. It follows the playbook on access tiers and audit trails, letting you gate sensitive runs behind team keys or guest views. Developers lean on that solid footing, knowing proprietary pieces stay put while still unlocking the full power of collective checks.

Use Cases

Research outfits benchmark new architectures against baselines, spotting edge cases that tweak papers into publications. Product teams gate releases with pre-deploy scans, catching regressions that could sour user trust. Consultants spin quick audits for clients, turning vague concerns into concrete fixes that build lasting ties. Even open-source maintainers use it to crowdsource evals, fostering healthier repos without the solo slog.

Pros and Cons

Pros:

- Unified hub that trims the tool sprawl, saving setup headaches.

- Deep dives into metrics that reveal hidden hurdles early.

- Scales seamlessly from solo tests to squad syncs.

- Exports and integrations that slot into any workflow.

Cons:

- Learning the full metric menu takes a few rounds for new hands.

- Cloud-bound runs might pinch for air-gapped outfits.

- Advanced probes push toward paid paths for heavy hitters.

Pricing Plans

It opens with a free layer for light lifts, covering core evals on small sets to get your feet wet. From there, pro tiers kick in around the mid-twenties monthly for unlimited runs and custom dashboards, stepping to enterprise at triple that for dedicated support and scale. Annual commitments carve off a chunk, and trials grant full access for a fortnight, easing the leap without blind bets.

How to Use Dimension

Sign up and snag your API key, then hook it into your script or dashboard with a simple import. Load your model and data slices, pick the probes that fit (accuracy splits or fairness checks, for example), and fire away to watch reports roll in. Review the visuals, tweak thresholds for alerts, and feed the findings back into your workflow for the next iteration. It's a rhythm that builds quickly, turning one-off checks into ongoing guardians.
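The "accuracy splits" and alert thresholds mentioned above can be sketched generically. This is not Dimension's client code, since the page gives no import name or call signatures. The helper names `accuracy_by_slice` and `slices_below_threshold`, and the record layout of `(slice_name, y_true, y_pred)`, are assumptions made for illustration.

```python
from collections import defaultdict


def accuracy_by_slice(records):
    """Compute accuracy per data slice.

    `records` is an iterable of (slice_name, y_true, y_pred) tuples;
    this layout is an assumption of the sketch, not a documented format.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for slice_name, y_true, y_pred in records:
        total[slice_name] += 1
        correct[slice_name] += int(y_true == y_pred)
    return {name: correct[name] / total[name] for name in total}


def slices_below_threshold(scores, threshold):
    """Return the names of slices scoring under the alert threshold, sorted."""
    return sorted(name for name, score in scores.items() if score < threshold)
```

For example, feeding in labeled predictions tagged by language and calling `slices_below_threshold(scores, 0.7)` would list the languages where the model underperforms, which is the kind of signal an alert threshold is meant to surface.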

Comparison with Similar Tools

Where some platforms pile on flashy fronts but falter on fine points, Dimension digs deeper into dev needs, though those platforms may suit you better if you're after a zero-code setup. Against open kits that demand your own glue, it offers ready rigor without the rigmarole, but it may lose out with tinkerers who thrive on tailoring. It holds its ground for teams craving consistency over constant config, blending breadth with bite in a crowded eval lane.

Conclusion

Dimension stands as a steadfast scout in the shifting sands of model building, arming you with evals that illuminate paths forward. It transforms the art of assessment from afterthought to anchor, ensuring your creations carry weight where it counts. As AI ambitions keep climbing, this tool tunes the trajectory, whispering warnings and wins that keep you ahead of the curve.

Frequently Asked Questions (FAQ)

What models does it handle?

From transformers to tabular trainers, it covers the common crew with ease.

Can I run it offline?

Core calls need the cloud, but exports let you poke further in private.

How customizable are the reports?

From raw tables to tailored tiles, it bends to your brand's beat.

Does it support team sharing?

Yes, with role rings that ring-fence views for safe collab.

Is there a quick start for beginners?

Templates and tours guide the green through to glowing results.

AI Testing & QA, AI Analytics Assistant, AI Developer Tools, AI Monitor & Report Builder.

These classifications represent its core capabilities and areas of application. For related tools, explore the linked categories above.

Dimension details

This tool is no longer available on submitaitools.org; find alternatives on Alternative to Dimension.

Pricing

- Free

Apps

- Web Tools
