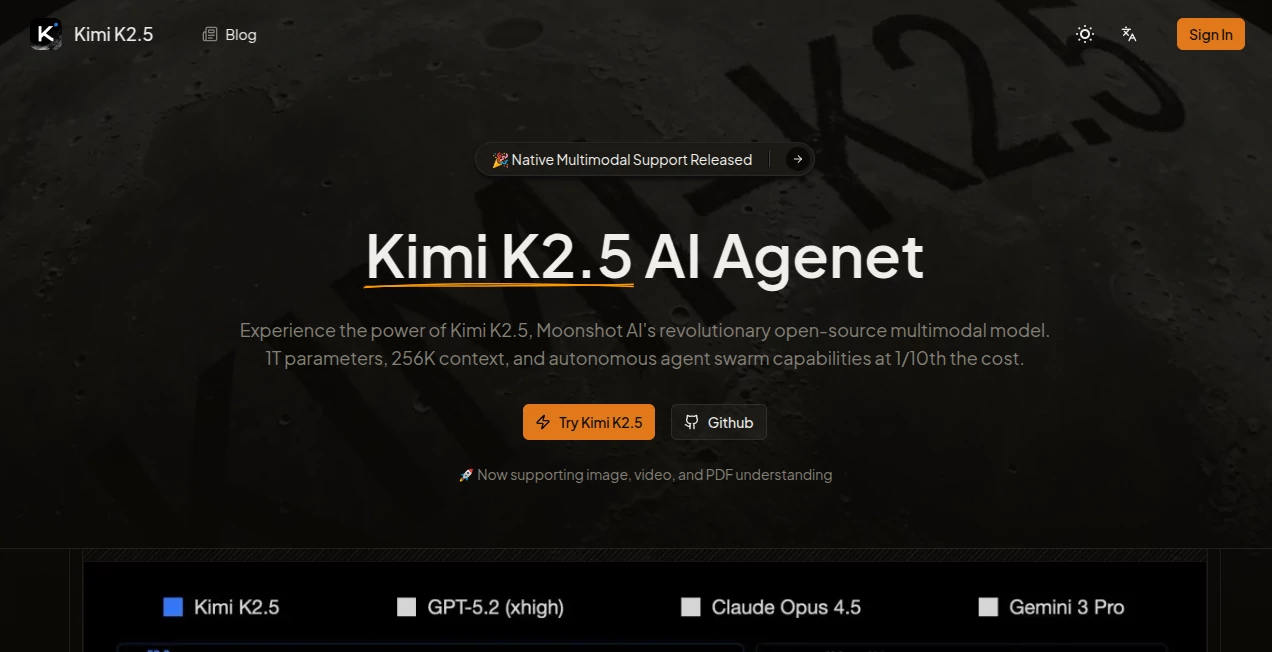

Kimi K2.5

What is Kimi K2.5?

Kimi K2.5 is Moonshot AI's open-weight multimodal model. Sometimes you just need an AI that doesn't quit when things get complicated, and this one feels like it brings a whole team along: multiple agents work in parallel on pieces of a tough problem, then pull everything together into something coherent and useful. I threw a messy codebase at it with a vague "make this better" prompt, and the result was a refactored version that actually made sense, with explanations that showed real understanding. It's the kind of help that makes you wonder how you managed without it.

Introduction

Large language models are everywhere now, but very few feel genuinely built for heavy lifting. This model stands apart: it has 1 trillion total parameters, yet its Mixture-of-Experts (MoE) design activates only about 32B of them per token, which keeps cost and latency manageable. Developers, researchers, and startups have taken notice of how it handles long inputs of up to 256K tokens, and how its agent swarm splits a complex task into many coordinated sub-tasks run by up to 100 sub-agents in parallel. What really stands out is the efficiency: it aims for frontier-level performance at a fraction of what closed competitors charge, making serious AI work accessible instead of a luxury.
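The "only activates what it needs" part comes from top-k expert routing: a small router scores every expert for each token, and only the highest-scoring few actually run. The sketch below is a toy illustration of that general technique, not Moonshot's implementation; the eight scalar experts, k=2, and softmax gating over the selected experts are assumptions chosen for readability. For scale, 32B active out of 1T total parameters means about 3.2% of the weights participate in any one token.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    return list(zip(chosen, softmax([router_logits[i] for i in chosen])))


def moe_forward(x, experts, router_logits, k):
    """Gate-weighted sum of the chosen experts' outputs; other experts never run."""
    return sum(gate * experts[i](x) for i, gate in route_token(router_logits, k))


if __name__ == "__main__":
    evaluated = []

    def make_expert(scale):
        # Toy expert: scales its input and records that it was evaluated.
        def expert(x):
            evaluated.append(scale)
            return scale * x
        return expert

    experts = [make_expert(s) for s in range(8)]          # 8 toy experts
    logits = [0.1, 2.0, -1.0, 1.5, 0.0, -0.5, 0.3, 0.2]   # router scores for one token
    print(moe_forward(1.0, experts, logits, k=2))
    print("experts evaluated:", sorted(evaluated))        # [1, 3]
    print(f"active share at 32B of 1T: {32e9 / 1e12:.1%}")  # 3.2%
```

Only experts 1 and 3 are ever called here; the other six cost nothing for this token, which is the whole point of the sparse design.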

Key Features

User Interface

Access is clean and straightforward. Whether you use the web playground, the API, or a local deployment, the workflow stays focused: prompts go in, responses come back fast, and in agent mode you can watch sub-tasks spin up and resolve in parallel without drowning in logs. It's designed so you spend time thinking about the problem, not wrestling with the tool.

Accuracy & Performance

Benchmarks back up the claims, with high scores on demanding reasoning tests such as GPQA-Diamond and MMMU-Pro, and it handles visual coding tasks (generating UI code from screenshots) with impressive fidelity. In real use, only 32B parameters are active per token, which keeps latency low while still producing thoughtful, accurate output. Long documents and codebases stay coherent across the context window, with no sudden forgetting halfway through.

Capabilities

Multimodal from the ground up: text, images, video frames, and PDFs are all processed natively without switching models. Visual coding turns mockups into working front-end code. The agent swarm coordinates up to 100 sub-agents and up to 1,500 parallel tool calls, which sharply cuts wall-clock time on workflows that split into independent pieces. The 256K-token context lets you feed in large projects or lengthy reports and get meaningful synthesis. Open weights mean you can run it locally with quantization for sensitive work.
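Any agent swarm rests on a fan-out/fan-in pattern: independent sub-tasks run concurrently under a cap, and their results are gathered back in order. The asyncio sketch below shows only that generic pattern. `run_subtask` is a hypothetical stand-in for a sub-agent's tool call, and `max_agents=100` merely mirrors the sub-agent limit quoted above rather than any real Kimi API parameter.

```python
import asyncio


async def run_subtask(name, seconds):
    """Hypothetical stand-in for one sub-agent's tool call; here it just waits."""
    await asyncio.sleep(seconds)
    return f"{name}: done"


async def run_swarm(subtasks, max_agents=100):
    """Run (name, seconds) sub-tasks concurrently, at most max_agents at once.

    Returns the results in input order plus the peak number of tasks in flight.
    """
    limit = asyncio.Semaphore(max_agents)
    in_flight = 0
    peak = 0

    async def guarded(name, seconds):
        nonlocal in_flight, peak
        async with limit:                  # wait for a free agent slot
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await run_subtask(name, seconds)
            finally:
                in_flight -= 1

    # Fan out all sub-tasks, then fan in: gather preserves input order.
    results = await asyncio.gather(*(guarded(n, s) for n, s in subtasks))
    return list(results), peak


if __name__ == "__main__":
    jobs = [(f"step-{i}", 0.05) for i in range(10)]
    results, peak = asyncio.run(run_swarm(jobs, max_agents=4))
    print(results[:2], "peak in flight:", peak)
```

With independent sub-tasks, total runtime approaches the number of waves times the longest task instead of the sum of all tasks; sub-tasks that depend on each other's output still have to be sequenced.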

Security & Privacy

When you host it yourself, your data never leaves your own machines, and INT4 quantization makes that more practical by cutting the memory the weights need. Cloud access depends on the provider's privacy practices, while open weights let teams inspect and control their own deployment stack. For companies handling proprietary code or research, that level of ownership is a rare and welcome relief.
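Self-hosting is where the hardware requirement bites. As a rough back-of-the-envelope estimate (not an official sizing guide), weight storage is parameters × bits ÷ 8: about 2,000 GB at 16-bit precision versus about 500 GB at INT4 for 1 trillion parameters, before counting quantization scales, the KV cache for long contexts, or activations. The quantizer below is plain symmetric per-group INT4, shown as a generic illustration; it is an assumption, not necessarily the scheme used for Kimi K2.5's released weights.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate weight storage in decimal GB; ignores scales, KV cache and activations."""
    return num_params * bits_per_param / 8 / 1e9


def quantize_int4(group):
    """Symmetric INT4 quantization of one weight group: ints in [-8, 7] plus one scale."""
    max_abs = max(abs(w) for w in group)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in group]
    return q, scale


def dequantize_int4(q, scale):
    """Recover approximate float weights from the 4-bit integers."""
    return [v * scale for v in q]


if __name__ == "__main__":
    print(weight_memory_gb(1e12, 16))  # 2000.0 (16-bit weights, 1T parameters)
    print(weight_memory_gb(1e12, 4))   # 500.0 (INT4 weights, 1T parameters)
    group = [0.7, -0.35, 0.12, -0.01, 0.0, 0.5]
    q, scale = quantize_int4(group)
    print(q, scale)
    print(dequantize_int4(q, scale))
```

Each weight is off by at most half a quantization step (`scale / 2`), which is the accuracy traded for the 4× smaller footprint.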

Use Cases

A solo developer feeds in a sprawling legacy codebase, asks for modernization suggestions, and gets structured refactoring steps with explanations. A research team works through a 2-million-character document dump, which is larger than a single 256K-token window and so is processed in chunks, and receives concise insights with cited sections. Startups prototype features by uploading UI screenshots and watching full front-end code appear. Automation enthusiasts set up agent teams for multi-step data pipelines that run faster and more reliably than single-threaded approaches. It's the versatility that keeps surprising people.
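A 2-million-character corpus is roughly 500K tokens under the common rule of thumb of about 4 characters per token for English text (other languages and tokenizers differ), so it has to be split before it fits a 256K-token window. Below is a minimal character-based chunking sketch; the 4.0 ratio and the 200K-token per-chunk budget, which leaves headroom for instructions and the answer, are assumptions rather than Kimi-specific values.

```python
import math


def estimate_tokens(text, chars_per_token=4.0):
    """Rough token count from length; a rule of thumb, not Kimi's actual tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def chunk_text(text, max_tokens, chars_per_token=4.0, overlap_chars=0):
    """Split text into consecutive pieces whose estimated size stays within max_tokens.

    overlap_chars repeats the last characters of each chunk at the start of the
    next one, giving the model some shared context across chunk boundaries.
    """
    max_chars = int(max_tokens * chars_per_token)
    if overlap_chars >= max_chars:
        raise ValueError("overlap must be smaller than the chunk size")
    chunks = []
    start = 0
    while start < len(text):
        end = min(start + max_chars, len(text))
        chunks.append(text[start:end])
        if end == len(text):
            break
        start = end - overlap_chars
    return chunks


if __name__ == "__main__":
    corpus = "x" * 2_000_000                        # stand-in for a 2-million-character dump
    print(estimate_tokens(corpus))                  # 500000
    parts = chunk_text(corpus, max_tokens=200_000)  # 200K budget per chunk (assumption)
    print(len(parts), [estimate_tokens(p) for p in parts])  # 3 [200000, 200000, 100000]
```

Each chunk is then summarized separately and the partial results combined in a final pass; a real pipeline would count tokens with the model's own tokenizer instead of the character heuristic.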

Pros and Cons

Pros:

- Large 256K-token context window that stays coherent on long inputs.

- Agent swarm turns complex tasks into parallel wins.

- Multimodal handling without model-switching friction.

- Frontier performance at a fraction of the usual cost.

Cons:

- Full power needs serious hardware for local runs.

- Cloud pricing still adds up on very heavy usage.

Pricing Plans

At $0.39 per million input tokens, it is positioned as one of the most cost-effective frontier models available, well below the input rates of GPT-4o and some newer flagships. Open weights also mean you can run it locally with no per-token fees, paying only for hardware and power after setup. No hidden tiers or aggressive upselling; just transparent, competitive pricing that respects heavy users.
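To turn the per-token rate into a budget, multiply tokens by the price and divide by one million. The sketch below uses the $0.39 input rate quoted above; the workload (100 requests a day of 200K input tokens each) is hypothetical, and output tokens, which are billed separately at their own rate, are left for you to add from the provider's current price list.

```python
def cost_usd(tokens, price_per_million):
    """Cost in USD for `tokens` billed at `price_per_million` dollars per 1M tokens."""
    return tokens * price_per_million / 1_000_000


KIMI_INPUT_PRICE = 0.39  # USD per 1M input tokens, the rate quoted above


def monthly_input_cost(requests_per_day, days, tokens_per_request, price=KIMI_INPUT_PRICE):
    """Input-token spend for a steady workload (output tokens not included)."""
    return cost_usd(requests_per_day * days * tokens_per_request, price)


if __name__ == "__main__":
    # One prompt that fills the window, treating "256K" as 256,000 tokens.
    print(f"Full-window prompt: ${cost_usd(256_000, KIMI_INPUT_PRICE):.4f}")
    # Hypothetical workload: 100 requests/day, 30 days, 200K input tokens each.
    print(f"Monthly input spend: ${monthly_input_cost(100, 30, 200_000):.2f}")
```

That works out to roughly $0.10 for a prompt that fills the whole window and about $234 a month of input tokens for the hypothetical workload, before output tokens.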

How to Use Kimi K2.5

Start in the playground: type your prompt, upload images, PDFs, or video frames if needed, adjust parameters if you want, and hit send. For agent swarm tasks, describe the goal and let the model break it into parallel steps. API users send standard chat requests with multimodal inputs and receive structured outputs. Local deployment follows the open-weight repo: quantize to INT4, serve with vLLM or SGLang, and run privately. Quick iterations and long context make experimenting feel natural.
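For API or local use, a request is an ordinary chat-completion payload. The sketch below assumes an OpenAI-compatible `/chat/completions` endpoint, the model id `kimi-k2.5`, and the base URLs shown (`https://api.moonshot.ai/v1` for the hosted API, `http://localhost:8000/v1` for a vLLM server on its default port); treat all of these as assumptions to check against the provider's documentation. Sending images as base64 data URLs in OpenAI-style `image_url` content parts is likewise an assumption about what the endpoint accepts.

```python
import base64
import json
import urllib.request

# Assumed endpoints and model id; verify against the provider's docs before use.
CLOUD_BASE_URL = "https://api.moonshot.ai/v1"
LOCAL_BASE_URL = "http://localhost:8000/v1"  # e.g. a local vLLM server


def build_chat_request(prompt, model="kimi-k2.5", image_bytes=None, image_mime="image/png"):
    """Build an OpenAI-style chat payload with an optional inline image."""
    content = [{"type": "text", "text": prompt}]
    if image_bytes is not None:
        encoded = base64.b64encode(image_bytes).decode("ascii")
        content.append({"type": "image_url",
                        "image_url": {"url": f"data:{image_mime};base64,{encoded}"}})
    return {"model": model, "messages": [{"role": "user", "content": content}]}


def send(payload, base_url, api_key):
    """POST the payload to {base_url}/chat/completions and return the reply text."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

For example, `send(build_chat_request("Turn this mockup into HTML.", image_bytes=png_bytes), CLOUD_BASE_URL, api_key)` would ask for visual coding from a screenshot. Because a local vLLM or SGLang server can expose the same OpenAI-style interface, moving to a private deployment is mostly a matter of passing a different base URL.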

Comparison with Similar Tools

Compared to closed flagships, this delivers comparable or better multimodal and reasoning performance at dramatically lower cost. Against other open models, the agent swarm and visual coding capabilities stand out—few match the parallel coordination or UI-from-screenshot accuracy. It strikes a rare balance: raw power without the price tag, open access without sacrificing frontier-level results.

Conclusion

Powerful AI doesn't have to come with a luxury price or closed doors. This model proves that scale, efficiency, and openness can coexist, giving developers and researchers a serious tool that doesn't punish them for asking big questions. Whether you're refactoring code, analyzing documents, or building agent-driven workflows, it feels like a partner that scales with your ambition—quietly capable, surprisingly affordable, and genuinely forward-looking.

Frequently Asked Questions (FAQ)

How much context can it really handle?

256K tokens, enough for many codebases or very long documents in a single request; anything larger has to be split across requests.

Does it run locally?

Yes, open-weight with INT4 quantization for efficient private deployment.

What's the agent swarm good for?

Breaking complex tasks into parallel sub-tasks: up to 100 sub-agents and 1,500 tool calls, with large runtime savings on work that parallelizes well.

How good is the visual coding?

Generates complete front-end UI code directly from screenshots or mockups, with high visual fidelity.

Is it really that cheap?

$0.39 per million input tokens—significantly lower than most frontier alternatives.

Large Language Models (LLMs), AI Research Tool, AI Code Generator, AI Developer Tools.

These classifications represent its core capabilities and areas of application.

Kimi K2.5 details

Pricing

- Free

Apps

- Web Tools