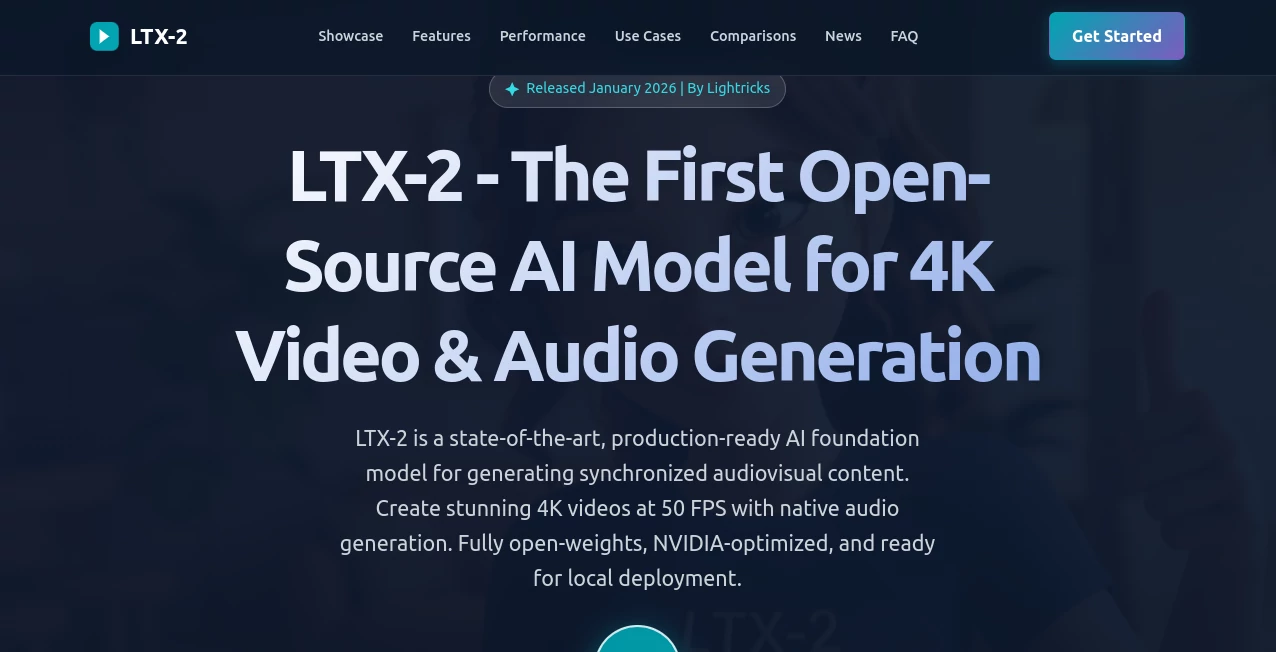

LTX-2

What is LTX-2?

There's a quiet thrill in watching a simple line of text or a still frame burst into a fully moving scene, complete with sounds that fit as if they were always there. This model delivers that spark in spades, churning out crisp 4K clips with synced dialogue, ambience, and music that feel thoughtfully put together. I've spent evenings playing with prompts, and the way it holds onto character details across cuts and nails cinematic moves from direct commands keeps pulling me back. It's the kind of tool that makes ambitious ideas feel within reach without the usual roadblocks.

Introduction

Built from the ground up as an open-source powerhouse, this 19-billion-parameter beast stands out by generating video and audio in a single pass, pushing boundaries with native 4K at up to 50 frames per second and clips up to 20 seconds long. What sets it apart is the freedom: fully Apache-licensed weights, code, and pipelines mean you can run it locally, fine-tune it with LoRAs for custom styles, or deploy it wherever you like. Creators praise its temporal coherence, which keeps scenes steady from frame to frame, and NVIDIA's optimizations, which cut VRAM requirements while boosting speed. It's a genuine leap for open tools, pairing pro-level specs with community-driven flexibility that invites tinkering.

Key Features

User Interface

Jump into browser demos or build your own ComfyUI workflows. Prompts flow naturally, conditioning on images or keyframes feels intuitive, and quick previews build confidence. The gallery showcases real outputs that inspire, while local setups give developers the hands-on control they want. It's straightforward enough for a prompt-and-go session, yet deep enough to support lasting workflow integrations.

Accuracy & Performance

Motion stays coherent over longer runs, and audio stays locked to the picture, with crisp dialogue and well-timed effects. The optimizations make generation 3x faster on modern cards while using far less memory. A 10-second 4K clip can finish in minutes on solid hardware, with quality that holds its own against closed rivals. In testing, it reliably follows imperative cues such as camera pans, delivering a polished result without endless rerolls.

Capabilities

Text or image prompts grow into dynamic clips, with multi-keyframe guidance, forward or backward extension, and video-to-video edits that respect the source footage. Joint audiovisual output means no post-production sync headaches, LoRA support adds personal touches, and resolutions climb to true 4K at high frame rates. It's built for everything from quick concepts to refined sequences, all self-hostable for full control.

Security & Privacy

Run everything on your own machine, with no cloud required, so your data stays put; that makes it ideal for sensitive or proprietary work. Because it's open, you can audit and modify it as needed, and self-hosting keeps you in charge of privacy from start to finish.

Use Cases

Stock libraries can bulk-generate fresh footage for editors, marketers can craft synced ads that convert, animators can pre-visualize complex beats, and educators can simulate engaging scenarios. Researchers can generate synthetic training data, while indie filmmakers can storyboard with high fidelity to their references. These practical bridges from idea to asset are what make it indispensable.

Pros and Cons

Pros:

- True open-source freedom to modify it and run it locally, with no gatekeeping.

- Native audio-video sync that saves real production time.

- Top-tier specs for an open model, such as native 4K and long temporal coherence.

- Efficient optimizations that play nice with consumer hardware.

Cons:

- Needs solid GPU muscle for peak speeds and resolutions.

- Prompting rewards direct commands over vague descriptions.

Pricing Plans

Completely free under Apache 2.0: download the weights and code and run them locally or on your own cloud, with no subscriptions or hidden fees. It's fully open access, and community integrations add polish at no extra cost.

How to Use LTX-2

Clone the repository, set up an environment on a CUDA-capable NVIDIA GPU, load the pipeline, and feed it a prompt with optional images or keyframes. Specify the frame count and resolution, then generate. Preview the results locally, iterate with LoRAs for style, and extend clips as needed. ComfyUI workflows make visual tweaking even smoother, turning a basic setup into a custom powerhouse.
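Choosing the frame count and resolution is mostly arithmetic: the number of frames is duration × frame rate, and the settings have to stay within the limits quoted on this page (20 seconds, 50 FPS, native 4K). The minimal Python sketch below assumes "4K" means 3840×2160 in landscape orientation. The `ClipSettings` class and its field names are hypothetical and are not LTX-2's actual API, and the real pipeline may impose further constraints on frame counts and dimensions, so check the repository before relying on these numbers.

```python
from dataclasses import dataclass

# Limits as quoted in this article (assumptions, not read from any official API):
# up to 20 seconds, up to 50 FPS, native 4K taken to mean 3840x2160 landscape.
MAX_SECONDS = 20
MAX_FPS = 50
MAX_WIDTH, MAX_HEIGHT = 3840, 2160


@dataclass
class ClipSettings:
    """Hypothetical settings container; field names are illustrative only."""
    prompt: str
    seconds: float
    fps: int
    width: int
    height: int

    def num_frames(self) -> int:
        # Frame count is duration times frame rate, rounded to a whole frame.
        return round(self.seconds * self.fps)

    def validate(self) -> None:
        # Reject settings beyond the limits quoted in the article.
        if not 0 < self.seconds <= MAX_SECONDS:
            raise ValueError(f"duration must be in (0, {MAX_SECONDS}] seconds")
        if not 0 < self.fps <= MAX_FPS:
            raise ValueError(f"fps must be in (0, {MAX_FPS}]")
        # Assumes landscape orientation; a portrait 2160x3840 clip would fail here.
        if not (0 < self.width <= MAX_WIDTH and 0 < self.height <= MAX_HEIGHT):
            raise ValueError(f"resolution must fit within {MAX_WIDTH}x{MAX_HEIGHT}")


if __name__ == "__main__":
    clip = ClipSettings("A slow dolly-in on a rain-soaked neon street", 10, 50, 3840, 2160)
    clip.validate()
    print(clip.num_frames())  # 500
```

With these inputs, a 10-second clip at 50 FPS comes to 500 frames, and the 20-second maximum to 1,000.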

Comparison with Similar Tools

Closed options may have an edge in polish and ease of use, but few match this one's open transparency, raw specs, and depth of audio-video sync without a paywall. It's the choice for tinkerers who want control and for pros chasing efficiency, and it often wins on flexibility where others lock features away.

Conclusion

This model pushes open AI video into exciting territory, blending high-end capabilities with the freedom to make it yours. It's a catalyst for creators ready to explore motion and sound without compromises, proving community-driven tech can rival the big players. Pull it down, prompt something wild, and see where the frames take you.

Frequently Asked Questions (FAQ)

What hardware do I need?

An NVIDIA RTX 40- or 50-series card with 16 GB or more of VRAM works best, though the optimizations let it run on more modest setups too.
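A back-of-the-envelope calculation shows why those memory optimizations matter. Weight storage is roughly parameter count × bytes per parameter; the sketch below applies that to the 19 billion parameters cited earlier, assuming the figure covers the whole model and counting weights only (activations, encoders, and framework overhead come on top). The precision labels are generic examples, not a list of checkpoints LTX-2 actually ships.

```python
# Rough weight-memory estimate for a 19-billion-parameter model.
# Counts model weights only; activations, text encoder, VAE, and
# framework overhead all add more on top of this.
PARAMS = 19e9

# Generic storage sizes per parameter (illustrative precisions).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16/fp16": 2.0,
    "fp8": 1.0,
    "4-bit": 0.5,
}


def weight_gib(params: float, bytes_per_param: float) -> float:
    """Return weight storage in GiB (1 GiB = 2**30 bytes)."""
    return params * bytes_per_param / 2**30


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{precision:>10}: {weight_gib(PARAMS, nbytes):5.1f} GiB")
```

At 16-bit precision the weights alone come to about 35.4 GiB, more than a 16 GB card holds, so reduced-precision weights or offloading are presumably what make such cards workable.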

Max length and quality?

Up to 20 seconds in native 4K at 50 FPS, with audio baked in.

Can I fine-tune it?

LoRA support makes custom styles or subjects straightforward.
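For context, LoRA (low-rank adaptation) usually works by freezing a pretrained weight matrix and learning a small low-rank update to it. The standard formulation is shown below; LTX-2's own training scripts may differ in the details (which layers are adapted, how the scale is set), and the layer size and rank in the worked example are illustrative assumptions, not the model's real dimensions.

$$
W' = W + \frac{\alpha}{r}\,BA,\qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

$$
\text{trainable: } r(d + k) \text{ vs. } dk;\qquad d = k = 4096,\ r = 16:\quad 16 \cdot 8192 = 131{,}072 \text{ vs. } 4096^2 = 16{,}777{,}216 \ (\approx 0.78\%)
$$

Training well under 1% of each adapted layer's parameters is what keeps this kind of fine-tuning light enough for custom styles or subjects.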

Commercial use allowed?

Fully under Apache 2.0—go for it.

Audio always included?

Yes. Audio and video are generated jointly, so the sound is synced to the prompt.

AI Image to Video, AI Music Video Generator, AI Text to Video, AI Video Generator.

These classifications represent its core capabilities and areas of application. For related tools, explore the linked categories above.

LTX-2 details

This tool is no longer available on submitaitools.org; see the Alternative to LTX-2 page for similar tools.

Pricing

- Free

Apps

- Web Tools