
seedance2

What is seedance2?

There’s something genuinely exciting about typing a short description, dropping in a couple of reference images, and watching a smooth, multi-shot video unfold that actually feels like it was directed with intention. This tool turns that idea into reality without the usual frustration of mismatched styles or jerky motion. I’ve seen creators go from a rough concept scribbled on a napkin to a polished clip ready for social media in under ten minutes: characters stay consistent, camera moves feel purposeful, and the whole thing has a cinematic rhythm that’s hard to fake. It’s the kind of leap that makes you want to keep experimenting just to see how far the next prompt can go.

Introduction

Short-form video dominates attention these days, and standing out means telling a story that flows naturally across multiple shots. This platform quietly solves that by letting you build narrative sequences with precise control over subjects, style, motion, and even sound—all from simple inputs. What draws people in is the freedom: combine text prompts with images, short video clips, and audio references, then watch the AI stitch everything into coherent scenes that respect your vision. Early users quickly discovered they could prototype ideas, create platform-ready content, or experiment with visual storytelling without endless editing rounds. It feels like having a collaborative director who understands exactly what you mean and delivers results fast enough to keep momentum alive.

Key Features

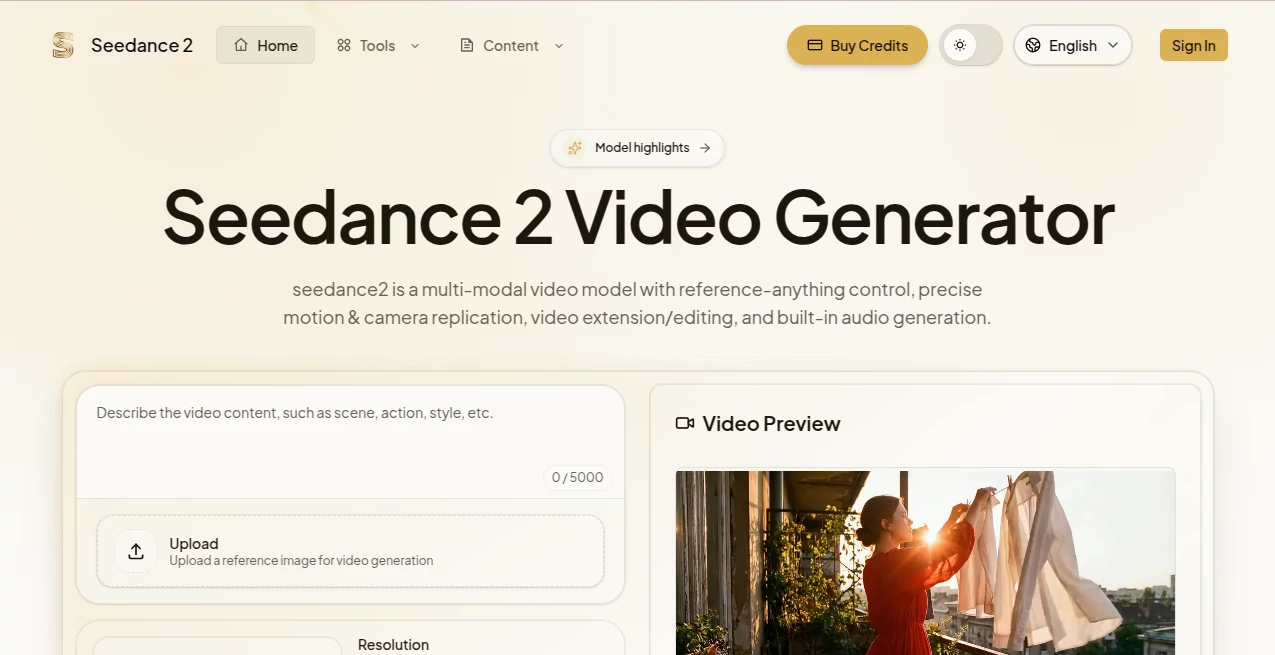

User Interface

The workspace is intentionally uncluttered. You add your main prompt, attach up to nine images, up to three short video clips (15 seconds combined at most), and up to three audio files, then assign roles using simple @ tags: first frame, last frame, style reference, subject consistency, motion path, camera language, or audio sync. A preview area shows your assets clearly, and generation starts with one click. It’s the sort of layout that lets you focus on creativity rather than figuring out controls; even first-timers pick up the rhythm quickly.
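If you plan your assets before opening the workspace, a small pre-flight check can catch uploads that exceed the limits described on this page. The figures it uses are nine images, three video clips and three audio files (each group capped at 15 seconds combined), and an output length of 4 to 15 seconds. This is a minimal sketch under those assumptions. `AssetPlan` and `check_plan` are hypothetical names for illustration only and are not part of any seedance2 API.

```python
from dataclasses import dataclass, field

# Hypothetical limits, copied from the figures quoted on this page (not an official API).
MAX_IMAGES = 9
MAX_VIDEOS = 3
MAX_AUDIO = 3
MAX_REF_SECONDS = 15.0      # combined-length cap, applied separately to videos and to audio
MIN_OUTPUT_SECONDS = 4.0
MAX_OUTPUT_SECONDS = 15.0


@dataclass
class AssetPlan:
    """Hypothetical description of what you intend to upload for one generation."""
    image_count: int = 0
    video_seconds: list[float] = field(default_factory=list)   # length of each reference clip
    audio_seconds: list[float] = field(default_factory=list)   # length of each audio file
    output_seconds: float = 5.0                                 # desired output duration


def check_plan(plan: AssetPlan) -> list[str]:
    """Return a list of problems; an empty list means the plan fits the stated limits."""
    problems = []
    if plan.image_count > MAX_IMAGES:
        problems.append(f"too many images: {plan.image_count} > {MAX_IMAGES}")
    if len(plan.video_seconds) > MAX_VIDEOS:
        problems.append(f"too many video clips: {len(plan.video_seconds)} > {MAX_VIDEOS}")
    if sum(plan.video_seconds) > MAX_REF_SECONDS:
        problems.append(f"video references total {sum(plan.video_seconds)}s > {MAX_REF_SECONDS}s")
    if len(plan.audio_seconds) > MAX_AUDIO:
        problems.append(f"too many audio files: {len(plan.audio_seconds)} > {MAX_AUDIO}")
    if sum(plan.audio_seconds) > MAX_REF_SECONDS:
        problems.append(f"audio references total {sum(plan.audio_seconds)}s > {MAX_REF_SECONDS}s")
    if not (MIN_OUTPUT_SECONDS <= plan.output_seconds <= MAX_OUTPUT_SECONDS):
        problems.append(
            f"output length {plan.output_seconds}s is outside "
            f"{MIN_OUTPUT_SECONDS}-{MAX_OUTPUT_SECONDS}s"
        )
    return problems


if __name__ == "__main__":
    ok = AssetPlan(image_count=4, video_seconds=[6.0, 7.5], audio_seconds=[10.0], output_seconds=12)
    print(check_plan(ok))  # prints []
    too_much = AssetPlan(image_count=10, video_seconds=[8.0, 8.0], output_seconds=20)
    for problem in check_plan(too_much):
        print(problem)
```

The check reports every violation at once instead of stopping at the first. That way you can fix the whole bundle in one pass before uploading.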

Accuracy & Performance

Consistency is where it really earns its keep—characters, outfits, products, and typography remain stable across shots even in dynamic scenes. Motion follows natural timing, camera language replicates complex moves, and audio aligns precisely with visuals. Generations typically finish in minutes, and the output quality holds strong at higher resolutions when supported. In practice, prompts that describe clear actions and moods tend to produce first-try winners, which saves a tremendous amount of iteration time.

Capabilities

It handles text-to-video, image-to-video, and video-to-video workflows with multi-shot storytelling in mind. Reference-anything control lets you dictate style, subject look, motion rhythm, camera behavior, or audio elements. Extend existing clips, edit shots while preserving continuity, or generate dialogue, ambience, and sound effects synced to the picture. Duration ranges from 4 to 15 seconds, giving enough room for mini-narratives or social-ready hooks without overwhelming complexity.

Security & Privacy

Your inputs (prompts, images, clips, audio) are processed securely and not retained longer than necessary. The system is designed with creator trust in mind, so you can experiment with personal projects or client concepts without lingering worries about data exposure.

Use Cases

A content creator sketches a quick product showcase, references existing footage for style and subject, and ends up with a smooth multi-shot reel that feels professionally shot. A filmmaker prototypes a key scene with specific camera moves and emotional beats, testing pacing before full production. A marketer builds brand-consistent ads by locking in product appearance and tone across shots. Even hobbyists turn weekend ideas into shareable clips that capture the mood they imagined. The ability to maintain visual and narrative continuity makes it especially valuable when storytelling matters more than raw length.

Pros and Cons

Pros:

- Outstanding subject and style consistency across multiple shots.

- Reference-anything control gives precise creative direction.

- Built-in audio generation and sync add polish without extra steps.

- Fast turnaround keeps the creative flow uninterrupted.

Cons:

- 15-second limit keeps focus on shorts rather than longer narratives.

- Best results come from clear, intentional inputs and references.

Pricing Plans

It keeps the entry point open so anyone can try the core experience without upfront commitment. Paid plans unlock higher resolutions, longer queues, more concurrent generations, and additional credits as your needs scale. The structure rewards light experimentation at no cost while making heavier creative work sustainable and affordable.

How to Use seedance2

Start with a descriptive prompt that outlines your story or scene. Attach supporting assets—images for style or subject, short clips for motion or camera reference, audio for sound guidance. Use @ tags to assign roles (first/last frame, overall style, character consistency, etc.). Preview your inputs, then generate. Review the result, extend shots if needed, or edit specific segments while keeping continuity. Download the finished clip and share or refine further. The loop is quick enough to iterate several ideas in one sitting.

Comparison with Similar Tools

While many generators excel at single shots or basic transitions, this one stands out in multi-shot coherence and reference-driven control. It handles complex storytelling beats—camera language, motion rhythm, subject persistence—more naturally than tools that require heavy post-production fixes. The integrated audio sync is another edge, reducing the need for separate editing steps. For creators who value narrative flow over sheer duration, it often feels like the more thoughtful choice.

Conclusion

Video creation should feel liberating, not laborious. This tool quietly removes many of the old barriers—style drift, motion awkwardness, sound mismatches—so the focus stays on the story you want to tell. When a simple prompt plus a few references turns into a clip that looks and feels directed, it’s hard not to get excited about what’s next. Whether you’re building content daily or exploring ideas on weekends, it’s a companion that respects your vision and delivers results worth sharing.

Frequently Asked Questions (FAQ)

How long can the videos be?

4 to 15 seconds—long enough for meaningful scenes and platform-ready shorts.

What inputs can I use?

Text prompt + up to 9 images, 3 videos (≤15s total), 3 audio files (≤15s total).

Does it keep characters consistent?

Yes—strong subject and style locking across shots is a core strength.

Can it add sound?

Built-in generation for dialogue, ambience, and effects synced to visuals.

How fast are generations?

Typically minutes, depending on complexity and current load.

AI Animated Video, AI Image to Video, AI Video Generator, AI Text to Video.

These categories reflect its core capabilities and areas of application.

seedance2 details

Pricing

- Free

Apps

- Web Tools