ai seedance 2.0

What is ai seedance 2.0?

Picture this: you type a short scene description, maybe attach a reference photo or clip, and hit generate. Thirty seconds later a little cinematic moment plays out with lighting that feels intentional, motion that tracks naturally, and even lip-sync when there’s dialogue. It’s the kind of output that makes you lean in and watch again because it doesn’t look like “AI video” in the usual sense. Friends who normally dismiss generated clips have paused mid-sentence when I’ve shown them these; the coherence and subtle camera language set them apart. That’s what keeps creators coming back: it feels like a collaborator who actually understands story rhythm.

Introduction

Video remains one of the most demanding creative mediums: storyboarding, shooting, editing, and sound design all take time and often money. This platform collapses large parts of that workflow into a single step. Start with words, add images or audio for guidance, and get a short, emotionally coherent clip that carries real cinematic weight. The model handles narrative flow, lighting continuity, character consistency, and subtle performance in ways that feel almost intuitive. Early users started sharing side-by-sides of text prompt and final clip, and the jump from flat description to living scene keeps surprising people. For anyone who thinks in moving pictures but lacks a full production pipeline, it opens doors that used to stay firmly closed.

Key Features

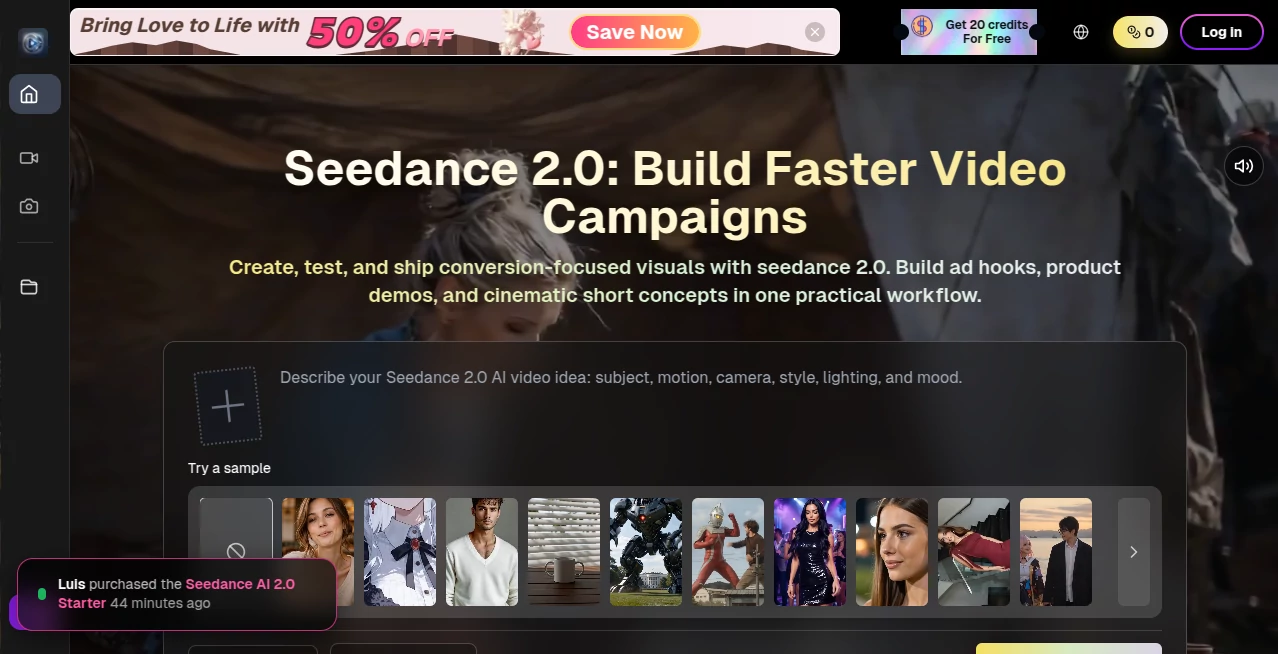

User Interface

The workspace is clean and focused—no visual clutter. A wide prompt field, optional image/video/audio upload zones, simple toggles for aspect ratio, duration, and style strength, then one clear “Generate” button. Previews load fast enough to keep you iterating rather than waiting. It respects your attention: everything essential is visible, nothing extraneous is in the way. Beginners finish their first clip quickly; experienced creators appreciate how little friction exists between idea and result.

Accuracy & Performance

Characters remain the same person across shots and lighting changes, with the same face structure, wardrobe, and hair movement. Physics behaves plausibly: cloth ripples naturally, hair catches wind, objects fall realistically. Complex multi-character prompts with camera movement rarely break coherence. Generation times stay comfortable (often 20–60 seconds), and the model avoids the usual pitfalls of melting faces or impossible jumps. When it does misfire, the cause is almost always traceable to an unclear or conflicting prompt rather than random hallucination.

Capabilities

Supported modes include text-to-video, image-to-video, and hybrid guidance (image + text + audio), along with multi-shot narrative flow, native lip-sync for dialogue, music-aware motion, multiple aspect ratios (vertical for social, horizontal for trailers), and strong support for stylized looks alongside photoreal. You can guide with reference images for character consistency, short clips for motion style, or audio for beat sync. It handles emotional close-ups, product reveals, dialogue scenes, music videos, and even subtle animation styles with surprising fidelity.

Security & Privacy

Inputs are processed ephemerally—nothing is retained for training or long-term storage unless you explicitly save the output. No mandatory account for basic use, no sneaky data harvesting. For creators working with client concepts, personal projects, or brand-sensitive material, that clean boundary provides real reassurance.

Use Cases

A skincare brand turns one product photo into a dreamy 8-second application clip that outperforms their previous live-action ads. An indie musician creates an official visualizer that actually matches the song’s emotional arc instead of generic loops. A short-form creator builds consistent character-driven Reels without daily filming. A filmmaker sketches key emotional beats to test tone before full production. The common thread: people who care about storytelling and mood, not just motion, and need results fast.

Pros and Cons

Pros:

- Outstanding character and style consistency across shots—rare at this quality level.

- Cinematic motion and lighting choices that feel thoughtfully directed.

- Hybrid guidance (text + image + audio) gives precise creative control.

- Generation speed that supports real creative iteration instead of waiting games.

Cons:

- Clip length caps at around 5–10 seconds (though multi-shot workflows extend storytelling).

- Extremely abstract or contradictory prompts can still confuse it (same as most models).

- Higher resolutions and priority queues live behind paid plans.

Pricing Plans

A meaningful free daily quota lets anyone experience the quality without commitment—no card required to start. Paid plans unlock higher resolutions, longer clips, faster queues, and unlimited generations. Pricing feels balanced for the leap in output fidelity; many creators find that one month covers what they used to spend on freelance editors or stock footage for a single campaign.

How to Use Seedance 2 Video

Open the generator, write a concise scene description (“golden-hour beach walk, young woman in white dress turns to camera and smiles softly”). Optionally upload a reference image or short clip for stronger visual/motion grounding. Select aspect ratio (vertical for Reels, horizontal for trailers) and duration. Press generate. Watch the preview—adjust wording or reference strength if the feel isn’t quite there—then download or create variations. For longer narratives, generate individual shots and stitch them in your editor. The loop is fast enough to refine several versions in one sitting.
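The last step, stitching individually generated shots, can be scripted once the clips are downloaded. The sketch below is a minimal illustration and not part of the tool itself: it assumes ffmpeg is installed and stands in for "your editor", and the helper functions and file names (`shot_01.mp4`, `shots.txt`, `story.mp4`) are hypothetical. It estimates how many shots a target runtime needs at a given per-clip length, then builds an ffmpeg concat list and the matching command.

```python
import math


def shots_needed(target_seconds: float, clip_seconds: float) -> int:
    """Number of clips of clip_seconds required to cover target_seconds."""
    if target_seconds <= 0 or clip_seconds <= 0:
        raise ValueError("durations must be positive")
    return math.ceil(target_seconds / clip_seconds)


def concat_list(clip_paths: list[str]) -> str:
    """Build the text of an ffmpeg concat-demuxer list file, one clip per line."""
    lines = []
    for path in clip_paths:
        # Paths are wrapped in single quotes; a literal quote is written as '\''
        escaped = path.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def concat_command(list_file: str, output_file: str) -> list[str]:
    """Arguments for joining the listed clips without re-encoding."""
    return [
        "ffmpeg", "-f", "concat", "-safe", "0",
        "-i", list_file, "-c", "copy", output_file,
    ]


if __name__ == "__main__":
    # Hypothetical example: a 30-second story built from 8-second shots.
    count = shots_needed(30, 8)
    clips = [f"shot_{i:02d}.mp4" for i in range(1, count + 1)]
    with open("shots.txt", "w", encoding="utf-8") as handle:
        handle.write(concat_list(clips))
    # Print the command instead of running it, so nothing executes by surprise.
    print(" ".join(concat_command("shots.txt", "story.mp4")))
```

Stream copy (`-c copy`) joins cleanly only when every shot shares the same codec, resolution, and frame rate, so generate all shots with the same aspect ratio and settings, or re-encode instead.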

Comparison with Similar Tools

Many models still produce visible drift, uncanny faces, or lighting jumps between frames. This one prioritizes narrative flow and cinematic intent, often delivering clips that feel closer to human-directed work. The hybrid input mode stands out—letting you steer with text, images, and audio together gives more director-like control than most alternatives offer.

Conclusion

Video creation has always been expensive in time, money, or both. Tools like this quietly lower that barrier so more people can tell visual stories without compromise. It doesn’t replace human taste or vision—it amplifies them. When the gap between “I have an idea” and “here’s the finished clip” shrinks to minutes, something fundamental shifts. For anyone who thinks in moving pictures, that shift is worth experiencing.

Frequently Asked Questions (FAQ)

How long can generated clips be?

Typically 5–10 seconds per generation; longer storytelling is possible by combining multiple shots.

Is a reference image required?

No. Text-only prompts work very well, but adding a reference image noticeably improves consistency.

What resolutions are supported?

Up to 1080p on paid plans; the free tier offers lower-resolution previews.

Can I use outputs commercially?

Yes—paid plans include full commercial rights.

Is there a watermark on free generations?

Free clips carry a small watermark; paid plans remove it completely.

AI Animated Video, AI Image to Video, AI Text to Video, AI Video Generator.

These classifications represent its core capabilities and areas of application. For related tools, explore the linked categories above.

ai seedance 2.0 details

Pricing

- Free

Apps

- Web Tools