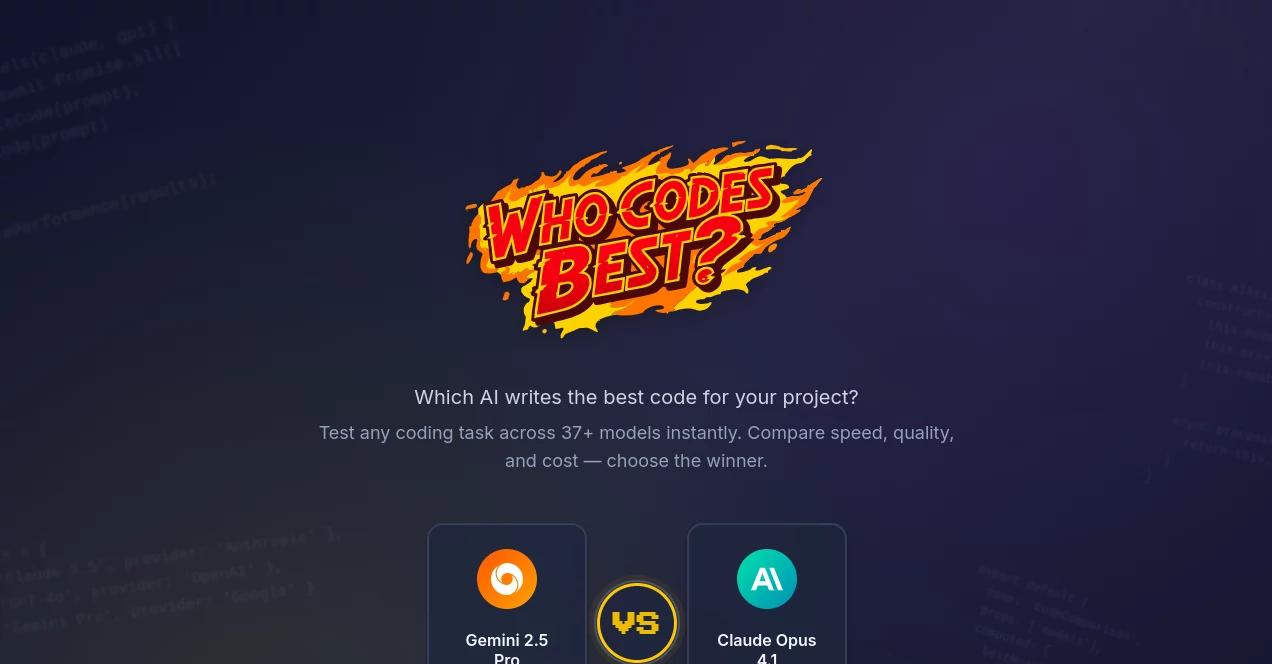

Who Codes Best

Compare AI Coding Agents

What is Who Codes Best?

Who Codes Best is a no-nonsense arena for pitting top coding assistants against each other, letting developers see which one delivers the cleanest, quickest code for their projects. The hub pulls together dozens of these assistants and runs head-to-head tests on real tasks, ranking them by how well they do the job. Developers in the trenches, from indie coders to team leads, turn to it when picking a sidekick that won't let them down mid-sprint, and often share stories of how a simple matchup saved hours of trial and error.

Introduction

Who Codes Best kicked off a bit over a year ago, born from the headaches of developers juggling too many shiny new tools without a clear champ in sight. The creators, a mix of coders and tech tinkerers, wanted a spot where anyone could drop a prompt and watch the showdown unfold, no setup required. It caught fire fast in online forums, with users swapping tales of surprise upsets—like underdogs outshining big names on quirky bugs. Today, it boasts tests across more than three dozen players, updating daily to keep pace with the whirlwind of releases, and has become that reliable referee for anyone tired of the hype and ready for hard numbers.

Key Features

User Interface

The landing page hits you with a crisp overview—stats on matchups and contenders right up top, no fluff. Pick a task or type your own, and a clean grid lights up with side-by-side outputs, scrolling smooth as you dig into diffs or scores. Buttons for fresh runs or saved faves keep things snappy, and the whole thing feels light on any screen, like chatting with a buddy who's got all the receipts handy.

Accuracy & Performance

When you fire off a prompt, the results mirror what each tool would produce in your own setup, quirks of logic and style included. It times the full cycle from start to finish and often wraps a batch in under a minute, even with the heavy hitters, so you get verdicts without the drag. Devs point to its track record, where top scorers consistently turn out bug-free code that passes real-world checks.

Capabilities

It lines up over thirty-seven heavyweights, from fresh faces to old reliables, tackling everything from quick functions to thorny algorithms. Beyond raw generation, it crunches scores on how slick the code runs, how much it costs to call, and user votes on style. You can batch tests for patterns or submit wild cards to grow the pool, turning one-off curiosities into shared benchmarks that light up blind spots.

Security & Privacy

Your prompts and runs stay private: they are processed on the fly rather than kept in any shared store, so you can experiment freely. Backend calls use standard protections, and since you're not plugging in personal repos, the risk stays low. Users appreciate the straightforward setup that skips the usual sign-up traps, keeping your workflow as private as a solo debug session.

Use Cases

A startup crew benchmarks a lineup for their app's backend, landing on the speed demon that halves their prototype time. Solo hackers test tweaks for a game mod, picking the one that weaves in edge cases without extra elbow grease. Educators drop class prompts to demo strengths, sparking debates on what makes code 'good' beyond the textbook. Even hobbyists pit tools on weekend puzzles, uncovering gems that jazz up their side gigs.

Pros and Cons

Pros:

- Packs a crowd of contenders into one quick arena, no app hopping.

- Balances hard metrics with gut-check votes for well-rounded picks.

- Zero barriers to entry, ideal for dipping toes or deep dives.

Cons:

- Custom runs can lag behind slow API responses when traffic spikes.

- No deep dives into why a model flubs—leaves some 'aha' moments hanging.

- Free access shines, but heavy users might crave saved workspaces.

Pricing Plans

Everything rolls free at the gate, from basic matchups to full-field tests, with no tiers gating the good stuff. Behind the scenes, it covers its own tab through partnerships, so you just show up and spar. There are whispers of pro paths in the works for power users eyeing bulk runs or custom dashboards, but for now it's all-access without the wallet hit.

How to Use Who Codes Best

Head over and scan the ready-made clashes, or punch in your puzzle to kick off a fresh round. Watch the lineup generate, then sift through the stacks—eyeball the diffs, check the tallies, and crown your champ. Submit winners or flops to the pool for others to chew on, and bookmark hot tasks for round two. It's that loop of test, tweak, triumph that hooks you in.

Comparison with Similar Tools

Where single-model playgrounds let you poke at one tool at a time, this one throws the whole roster in the ring for instant verdicts. Against broad AI evals, it focuses squarely on code craft, skipping chit-chat for syntax that sings. It edges out scattered blog comparisons by running the battles live, though those might offer cozier write-ups for casual scrolls.

Conclusion

Who Codes Best boils the buzz down to bare-knuckle bouts, arming coders with proof over promises when picking tools. It turns the guesswork of choosing a tool into a series of direct matchups, each one exposing strengths and weaknesses you'd otherwise miss. As the field keeps flooding with fresh faces, this corner stays the steady judge, calling shots that stick through the sprints.

Frequently Asked Questions (FAQ)

Which models get the spotlight?

A rotating roster of more than thirty-seven, from GPT flavors to Claude kin, all in the fray.

Can I toss in my own brain-teaser?

Drop it via the submit spot, and it'll join the jam for all to judge.

How do scores shake out?

A mix of timer ticks, quality checks, and crowd calls to keep it fair and fierce.

Is it just for pros?

Nah, from bootcamp buds to battle-hardened vets, anyone with a prompt fits right in.

What if my fave flops?

That's the beauty—spot the gaps, swap seats, and keep your code quest rolling.

AI Code Assistant, AI Code Generator, AI Developer Tools, AI Research Tool.

These classifications represent its core capabilities and areas of application. For related tools, explore the linked categories above.

Who Codes Best details

This tool is no longer available on submitaitools.org; for alternatives, see the Alternative to Who Codes Best page.

Pricing

- Free

Apps

- Web Tools