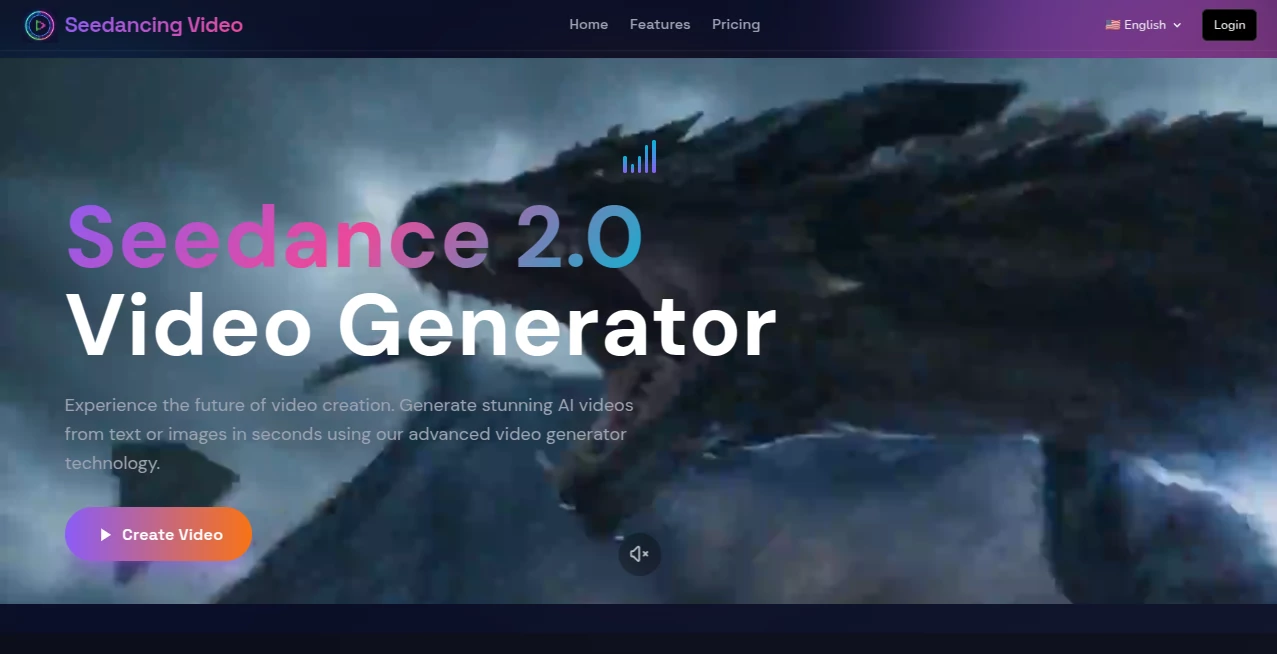

Seedance 2.0 AI

What is Seedance 2.0 AI?

You type a quick scene idea, maybe drop in a photo or short clip for reference, and a minute later a smooth little film plays back with lighting that feels right, motion that tracks naturally, and even lip-sync when there's dialogue. It's the sort of result that makes you lean in and watch twice, because it doesn't look like typical AI output. I've shown these clips to skeptical friends and watched their eyebrows go up: they're used to the usual glitches, but this one holds together in a way that feels almost hand-directed. That level of coherence is what keeps people coming back.

Introduction

Creating video has always been time-heavy: storyboards, shooting, editing, sound design. This platform compresses a huge chunk of that into a single step. Start with words, add images or audio if you want more control, and get a short cinematic piece that carries emotion and continuity. The model understands how shots should flow, how light should fall, and how characters should move; it isn't just animating frames, it's trying to tell the story you intended. Early users share clips that started as rough ideas and ended up looking like polished teasers. For anyone who thinks visually but lacks a full production setup, that's a game-changer.

Key Features

User Interface

The layout stays out of your way: a wide prompt area for your description, a clean upload zone for references, simple toggles for aspect ratio and length, and one clear button to start. Previews arrive fast enough to keep you iterating instead of waiting. It's designed so you spend time refining your vision, not figuring out controls. Beginners get their first clip quickly; experienced creators appreciate how little noise there is between the idea and the output.

Accuracy & Performance

Characters remain consistent across camera moves and lighting shifts: same face, same outfit, same vibe. Physics looks believable: cloth ripples, hair catches the wind, and objects fall realistically. Generation times stay reasonable, often under a minute for short pieces, and the model rarely falls into the usual traps of melting limbs or impossible jumps. When it does miss, it's usually because the prompt was too vague or contradictory, not random chaos. That reliability lets you trust the output and focus on creativity.

Capabilities

It supports text-to-video, image-to-video, a hybrid mode combining both, multi-shot storytelling with natural transitions, native audio sync for dialogue and effects, and multiple aspect ratios. You can guide a generation with several references at once (photos for style, clips for motion, audio for timing), and the result weaves them into a coherent sequence. It's strong on emotional beats, subtle camera language, and visual continuity across cuts, which makes it feel closer to real filmmaking than most AI video attempts.

Security & Privacy

Inputs are handled temporarily—processed and discarded after generation unless you save the output. No long-term storage of your prompts, images, or clips for training purposes. For creators working with client concepts, personal projects, or brand material, that clean separation provides real reassurance.

Use Cases

A small brand turns one hero product shot into a short lifestyle clip that feels like a real ad. A musician creates a visualizer that matches the song's emotional arc instead of generic loops. A short-form creator builds consistent character-driven Reels without daily filming. A filmmaker sketches key story moments to test tone before full production. The common thread is speed plus quality—getting something watchable and shareable without weeks of work.

Pros and Cons

Pros:

- Exceptional consistency in characters, style, and lighting across shots.

- Cinematic choices that give clips real mood and flow.

- Hybrid guidance (text + image + audio) for precise control.

- Fast enough generations that you can actually iterate creatively.

Cons:

- Clip lengths remain short (typically 5–10 seconds), though multi-shot helps build narratives.

- Very abstract prompts can still lead to unexpected results.

- Higher resolutions and priority access require paid plans.

Pricing Plans

Free daily credits let you test the quality without any commitment, and they're enough to feel the difference. Paid plans unlock higher resolutions, longer clips, faster queues, and unlimited generations. The pricing feels fair for the jump in quality; many creators find that a single month's subscription replaces what they used to spend on freelance editors or stock footage for a campaign.

How to Use Seedance 2.0 AI

Start with a clear, concise prompt describing the scene and mood. Upload a reference image or short clip if you want stronger grounding (highly recommended for character consistency). Choose aspect ratio and duration, then generate. Watch the preview—tweak wording or reference strength if the feel isn't quite there—and download or create variations. For longer stories, generate individual shots and stitch them in your editor. The loop is fast enough to refine several versions in one sitting.

Comparison with Similar Tools

Many competitors still produce visible inconsistencies, odd physics, or lighting jumps between frames. This one prioritizes narrative flow and cinematic intent, often delivering clips that feel closer to human-directed work. The hybrid input mode stands out—letting you steer with text, images, and audio together gives more director-like control than most alternatives offer.

Conclusion

Video has long been one of the most demanding mediums to work in, and tools like this are starting to change that. They don't erase the need for taste or vision; they amplify it. When the distance between an idea in your head and a watchable clip shrinks to minutes, storytelling becomes more accessible. For creators who think in motion, that's a quiet revolution worth exploring.

Frequently Asked Questions (FAQ)

How long can generated clips be?

Usually 5–10 seconds per generation; longer narratives come from combining multiple shots.

Is a reference image required?

No. Text-only prompts work well, but adding a reference image dramatically improves consistency.

What resolutions are available?

Up to 1080p on paid plans; the free tier offers preview-quality output.

Can I use outputs commercially?

Yes—paid plans include full commercial rights.

Are free generations watermarked?

Yes, free clips carry a small watermark; paid plans remove it completely.

AI Animated Video, AI Image to Video, AI Video Generator, AI Text to Video.

These classifications represent its core capabilities and areas of application. For related tools, explore the linked categories above.

Seedance 2.0 AI details

Pricing

- Free

Apps

- Web Tools